Packet Processing in the Linux Kernel

When packets arrive at the NIC, it sends an interrupt request (IRQ) to the CPU. The CPU then stops the task it was performing (such as a computation) to receive the packets. Every interrupt therefore reduces the CPU’s capacity for its primary computational tasks.

With VMs, this problem gets worse. Under virtualization the CPU is interrupted twice. The first interrupt occurs when packets enter the NIC, which sends an interrupt request to the CPU core running the hypervisor. The second occurs once the packets have been sorted by MAC address or VLAN ID, when an interrupt request is sent to the CPU core of the destination VM.

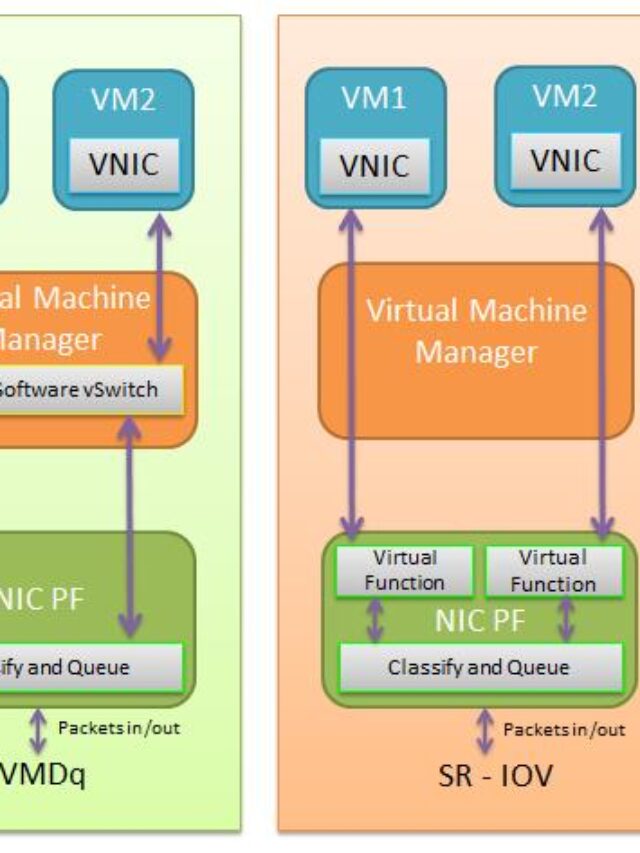

This double CPU interruption hurts performance. The first interruption is especially critical, because the core that handles it can easily be overloaded. We can avoid this problem with Intel VMDq (Virtual Machine Device Queues). VMDq allows the hypervisor to assign a separate queue in the NIC to each VM, which eliminates the first interruption: only the CPU core hosting the destination VM needs to be interrupted. The packet is touched only once, as it is copied directly into the VM’s user space memory.
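The per-VM queue assignment described above can be pictured with a small simulation. This is a minimal sketch in Python, not NIC or driver code: the `Packet` and `VmdqNic` names, the MAC addresses, and the VLAN IDs are all hypothetical, and dropping unmatched packets is an assumption made to keep the model small.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    dst_mac: str
    vlan_id: int
    payload: bytes


class VmdqNic:
    """Toy model of a NIC that sorts packets into per-VM receive queues."""

    def __init__(self):
        self.queue_for = {}  # (dst_mac, vlan_id) -> VM name
        self.queues = {}     # VM name -> deque of packets
        self.dropped = 0

    def assign_queue(self, vm, dst_mac, vlan_id):
        # The hypervisor programs one queue per VM, keyed on MAC and VLAN ID.
        self.queue_for[(dst_mac.lower(), vlan_id)] = vm
        self.queues.setdefault(vm, deque())

    def receive(self, pkt):
        # Sorting happens in the NIC; the return value names the only VM
        # whose CPU core would need to be interrupted.
        vm = self.queue_for.get((pkt.dst_mac.lower(), pkt.vlan_id))
        if vm is None:
            self.dropped += 1  # assumption: unmatched packets are dropped
            return None
        self.queues[vm].append(pkt)
        return vm


nic = VmdqNic()
nic.assign_queue("vm1", "52:54:00:AA:00:01", 100)
nic.assign_queue("vm2", "52:54:00:AA:00:02", 200)

target = nic.receive(Packet("52:54:00:aa:00:02", 200, b"hello"))
print(target, len(nic.queues["vm2"]))
```

The point of the sketch is that the lookup happens before any CPU is involved, so the hypervisor core never has to handle the packet just to decide where it goes.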

So VMDq removes this bottleneck. Since the hypervisor is still in the path, we can leverage vSwitch capabilities as well.

VMDq improves performance considerably, but this is not enough for data-plane-centric virtualized network functions (VNFs), whose throughput requirement is higher, close to line rate.

To meet this line-rate requirement for VNFs, PCI passthrough (PCI-PT) and SR-IOV come into the picture.

What is PCI-Passthrough?

With PCI passthrough, we bypass the vSwitch and the virtualization layer and assign a physical NIC port directly to the VM. There is no hypervisor interruption on the data path, and performance is almost line rate.

The problems with PCI-PT are static port binding and the number of ports required: because each VM needs its own NIC port, more server NIC ports and more physical switch ports are needed. Static port binding also limits VM mobility. The VNF must support the NIC driver, which increases the risk of NIC vendor lock-in.

Suppose a VNF can process 50 Gbps and is assigned a 100G NIC port. Half of the link bandwidth is wasted. SR-IOV avoids this problem.
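The waste in this example can be worked out directly. The helper below is a hypothetical illustration (the function name and parameters are not from any library) that computes how much of a dedicated port a single VNF actually uses.

```python
def port_utilization(vnf_gbps, port_gbps):
    """Return (utilization fraction, idle Gbps) for a port dedicated to one VNF."""
    if port_gbps <= 0:
        raise ValueError("port_gbps must be positive")
    used = min(vnf_gbps, port_gbps)  # a VNF cannot use more than the port offers
    return used / port_gbps, port_gbps - used


utilization, idle = port_utilization(vnf_gbps=50, port_gbps=100)
print(f"utilization={utilization:.0%}, idle={idle} Gbps")
```

With PCI passthrough the idle 50 Gbps cannot be given to another VM, because the whole port is bound to one VM.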

What is SR-IOV?

SR-IOV allows a single physical NIC port to be presented as multiple virtual NIC ports. It does this with physical functions (PFs) and virtual functions (VFs). There is no hypervisor interruption on the data path, and performance is almost line rate.

Physical function (PF):

A PF is a full PCIe function that is capable of configuring and managing SR-IOV functionality, which it does by assigning VFs. A PF is also fully capable of moving data in and out of the device.

Virtual function (VF):

A VF is a lightweight PCIe function that only processes I/O. VFs are derived from a PF. We can create many VFs from a single PF and assign them to different VMs. The number of VFs that can be configured depends on what the NIC supports.

Each VF in the NIC is given a descriptor that tells it where the user space memory owned by the VM it serves resides. Once packets are received on the physical port and sorted (again by MAC address, VLAN tag, or some other identifier), they are queued in the VF until they can be copied into the VM’s memory location.

This interrupt-free operation frees the VM’s CPU core for compute operations, and performance is almost line rate. Both the hypervisor and the VNF must support the NIC driver, so the risk of NIC vendor lock-in increases here as well.
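On Linux, the VF count of a PF is exposed through the `sriov_totalvfs` and `sriov_numvfs` files in the PF's sysfs device directory. The sketch below shows how a script might request VFs while respecting the NIC's limit. The `set_num_vfs` helper is a hypothetical name, and the demo runs against a temporary directory standing in for a real path such as `/sys/class/net/<iface>/device`, so it needs neither root access nor SR-IOV hardware.

```python
import tempfile
from pathlib import Path


def set_num_vfs(device_dir, num_vfs):
    """Ask the PF at device_dir to expose num_vfs virtual functions."""
    device_dir = Path(device_dir)
    total = int((device_dir / "sriov_totalvfs").read_text())
    if not 0 <= num_vfs <= total:
        raise ValueError(f"requested {num_vfs} VFs, but the NIC supports at most {total}")
    numvfs_file = device_dir / "sriov_numvfs"
    current = int(numvfs_file.read_text())
    if current == num_vfs:
        return current
    if current != 0 and num_vfs != 0:
        # Changing a non-zero VF count usually requires disabling the VFs first.
        numvfs_file.write_text("0")
    numvfs_file.write_text(str(num_vfs))
    return num_vfs


# Stand-in for a real PF device directory (assumption for this demo only).
fake_pf = Path(tempfile.mkdtemp())
(fake_pf / "sriov_totalvfs").write_text("8\n")
(fake_pf / "sriov_numvfs").write_text("0\n")

print(set_num_vfs(fake_pf, 4))
print((fake_pf / "sriov_numvfs").read_text())
```

On a real host the write is handled by the PF driver, which creates the VFs as additional PCIe functions; the upper bound read from `sriov_totalvfs` is the NIC-dependent limit mentioned above.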

DPDK (Data Plane Development Kit)

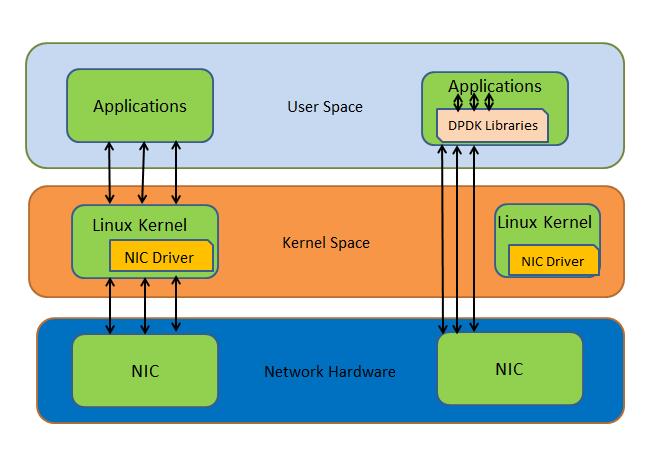

The Data Plane Development Kit consists of a set of standard libraries and optimized NIC drivers that accelerate packet processing on multiple CPU cores. DPDK enables rapid development of network applications that need fast packet processing. It takes advantage of the CPU’s instruction set architecture and provides more efficient processing than the standard interrupt-driven path in the kernel. DPDK also works with cloud databases, for example where a database is offered as a service.

DPDK can be employed in any network function built to run on Intel architectures; Open vSwitch (OVS) is the ideal use case.

The Data Plane Development Kit contains memory, buffer, and queue managers, a flow classification engine, and a set of poll mode drivers. Similar to Linux New API (NAPI) drivers, the DPDK poll mode drivers perform the all-important interrupt mitigation, like what we have seen with SR-IOV. Removing interrupts increases performance significantly. In poll mode, the driver checks the interface periodically instead of waiting for an interrupt, which pays off when traffic volume is high. When few or no packets are arriving, however, polling may add latency and jitter. To avoid this, DPDK can be configured to switch to interrupt mode when the incoming packet rate falls below a threshold value.
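The switch between poll mode and interrupt mode can be illustrated with a short simulation. This is a sketch of the idea only and does not use the DPDK API: `receive_loop`, the tick-based traffic model, and the threshold and burst values are all assumptions chosen for illustration.

```python
from collections import deque


def receive_loop(arrivals, threshold, burst_size=32):
    """Simulate one receive core.

    arrivals[i] is the number of packets that arrive during tick i.
    Returns a list of (mode, packets_received) tuples, one per tick.
    """
    queue = deque()
    mode = "poll"
    log = []
    for count in arrivals:
        queue.extend(range(count))
        if mode == "interrupt" and not queue:
            # No packet, so no interrupt fires and the core stays idle.
            log.append((mode, 0))
            continue
        # Either a poll or an interrupt wake-up: drain up to one burst.
        received = min(burst_size, len(queue))
        for _ in range(received):
            queue.popleft()
        log.append((mode, received))
        # Stay in (or return to) poll mode only while traffic is heavy enough.
        mode = "poll" if received >= threshold else "interrupt"
    return log


log = receive_loop(arrivals=[40, 10, 0, 0, 5, 50], threshold=8)
print(log)
```

Each log entry records the mode the core was in when the tick started, so a heavy burst that arrives in interrupt mode is logged as `interrupt` and moves the core back to polling for the following tick.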

With the help of DPDK’s queue, memory, and buffer managers, we can also implement zero-copy DMA into large first-in, first-out (FIFO) ring buffers located in user memory. This improves packet processing performance dramatically. It also helps the application handle packets more efficiently: even when traffic volume is high and the CPUs are busy with application processing, packets can wait in the buffer instead of being lost.
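A FIFO ring buffer of this kind can be sketched in a few lines. The `RingBuffer` class below is a hypothetical, single-threaded illustration rather than DPDK's ring implementation. It stores references to packet buffers instead of copying their contents, which is the property zero-copy designs rely on, and the power-of-two capacity is a design choice of this sketch that lets indices wrap with a bit mask.

```python
class RingBuffer:
    """Fixed-size FIFO ring; capacity must be a power of two."""

    def __init__(self, capacity):
        if capacity < 1 or capacity & (capacity - 1):
            raise ValueError("capacity must be a power of two")
        self.slots = [None] * capacity
        self.mask = capacity - 1
        self.head = 0  # next slot to write (producer side)
        self.tail = 0  # next slot to read (consumer side)

    def __len__(self):
        return self.head - self.tail

    def enqueue(self, item):
        if len(self) == len(self.slots):
            return False  # ring full: the caller decides whether to drop or retry
        self.slots[self.head & self.mask] = item  # stores a reference, not a copy
        self.head += 1
        return True

    def dequeue(self):
        if self.head == self.tail:
            return None  # ring empty
        index = self.tail & self.mask
        item = self.slots[index]
        self.slots[index] = None
        self.tail += 1
        return item


ring = RingBuffer(4)
buffers = [bytearray(b"pkt%d" % i) for i in range(5)]

# The application is busy, so packets pile up until the ring is full.
accepted = [ring.enqueue(buf) for buf in buffers]
first = ring.dequeue()
print(accepted, first is buffers[0])
```

As long as the ring has free slots, a busy application only delays packets rather than losing them; once the ring is full, the producer has to drop or retry, which is why these buffers are sized generously.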